
GIGAPOD - Scalable, Turnkey AI Supercomputing Solution


Unleash a Turnkey AI Data Center with High Throughput and an Incredible Level of Compute

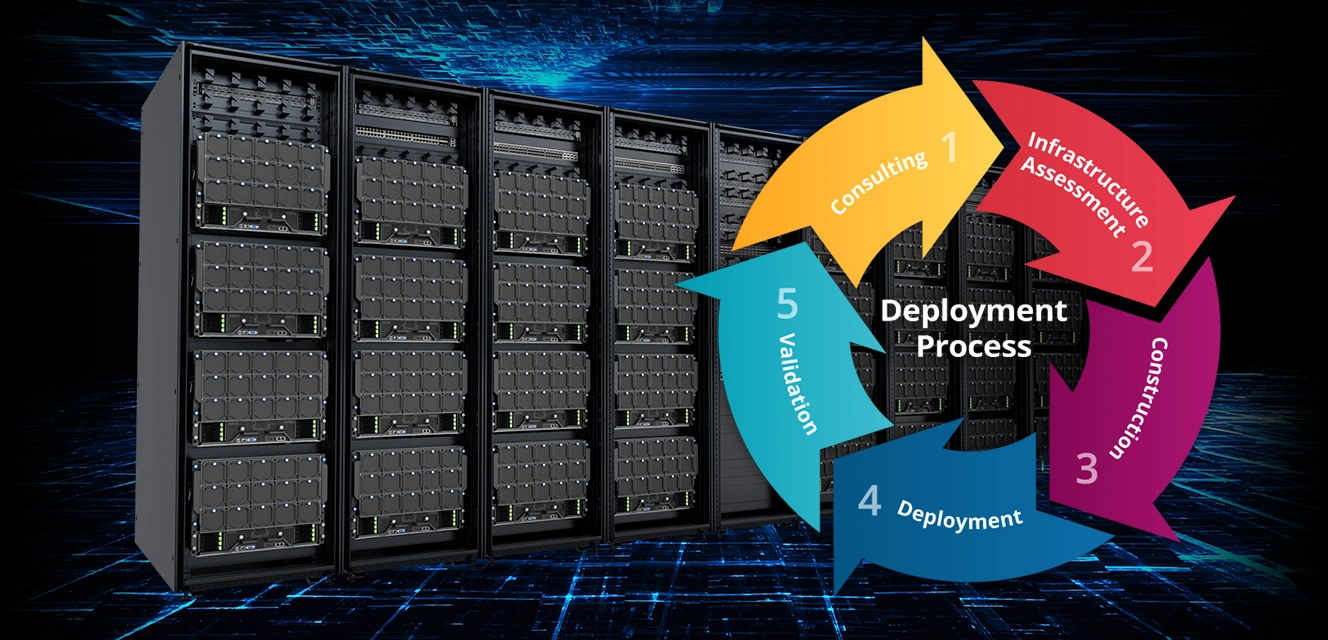

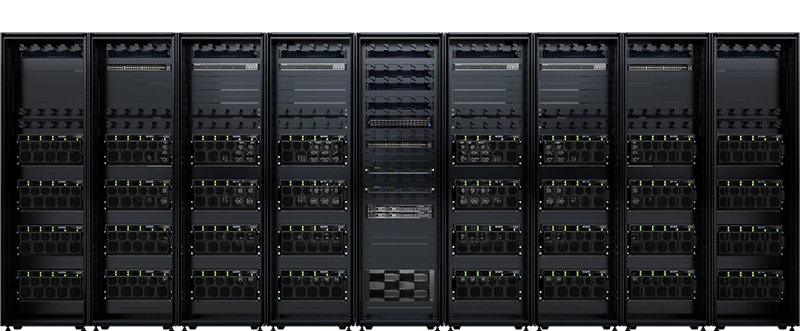

Giga Computing has been pivotal in providing technology leaders with supercomputing infrastructure built around powerful GIGABYTE GPU servers that house either NVIDIA H200 Tensor Core GPUs or AMD Instinct™ MI300 Series accelerators. GIGAPOD is a service that provides professional help in building a cluster of racks interconnected as a cohesive unit. An AI platform thrives on a high degree of parallel processing, so the GPUs are interconnected with blazing-fast NVIDIA NVLink or AMD Infinity Fabric links. With the introduction of GIGAPOD, Giga Computing now offers a one-stop source for data centers that are becoming AI factories running deep learning models at scale. Its hardware, expertise, and close relationships with cutting-edge GPU partners ensure that an AI supercomputer is deployed without a hitch and with minimal downtime.

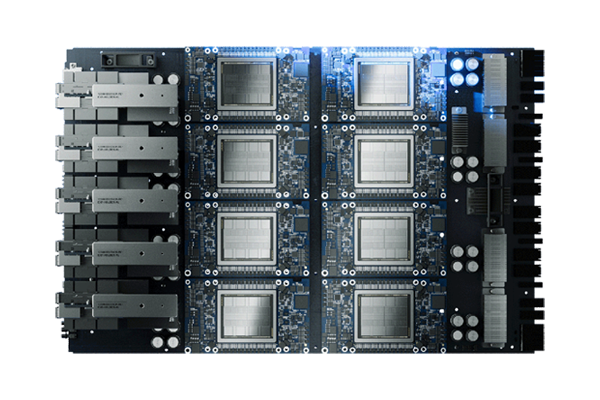

GIGABYTE G Series Servers Built for 8-GPU Platforms

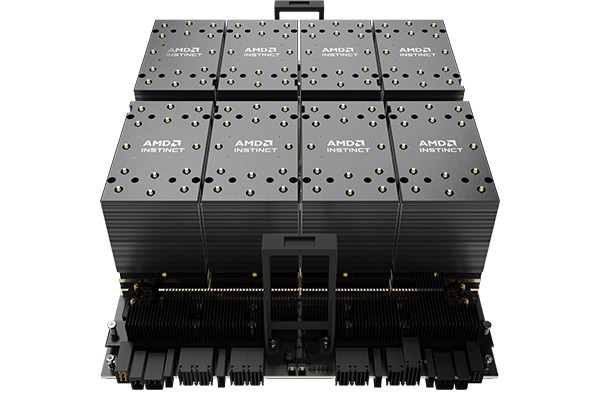

One of the most important considerations when planning a new AI data center is the selection of hardware, and in this AI era, many companies see the choice of GPU or accelerator as the foundation. Each of Giga Computing’s industry-leading GPU partners (AMD, Intel, and NVIDIA) has developed uniquely advanced products through teams of visionary and passionate researchers and engineers, and because each team is different, each new GPU generation brings advances that make it ideal for particular customers and applications. The choice of GPU is based mainly on factors such as performance (AI training or inference), cost, availability, ecosystem, scalability, and efficiency. The decision isn’t easy, but Giga Computing aims to provide choices, customization options, and the know-how to create ideal data centers that can meet growing demand and the ever-increasing parameter counts of AI/ML models.

NVIDIA HGX™ H200/H100

AMD Instinct™ MI300X

Intel® Gaudi® 3

Why is GIGAPOD the rack-scale service to deploy?


Industry Connections

Giga Computing works closely with its technology partners (AMD, Intel, and NVIDIA) to ensure a fast response to customers' requirements and timelines.

Depth in Portfolio

GIGABYTE servers (GPU, Compute, Storage, & High-density) span numerous SKUs tailored to a wide range of enterprise applications.

Scale Up or Out

A turnkey, high-performing data center has to be built with expansion in mind, so that new nodes or processors can be integrated effectively.

High Performance

From a single GPU server to a full cluster, Giga Computing has tailored its server and rack designs to deliver peak performance, with optional liquid cooling.

Experienced

Giga Computing has successfully deployed large GPU clusters and is ready to discuss the process and provide a timeline that meets customers' requirements.

The Future of AI Computing in Data Centers

The Ideal GIGAPOD for You

Air Cooling

| Ver. | GPUs Supported | GPU Server (Form Factor) | GPU Servers per Rack | Racks (Count x Height) | Power Consumption per Rack | RDHx (Rear-Door Heat Exchanger) |
|---|---|---|---|---|---|---|
| 1 | NVIDIA HGX™ H100/H200, AMD Instinct™ MI300X | 5U | 4 | 9 x 42U | 50 kW | No |
| 2 | NVIDIA HGX™ H100/H200, AMD Instinct™ MI300X | 5U | 4 | 9 x 48U | 50 kW | Yes |
| 3 | NVIDIA HGX™ H100/H200, AMD Instinct™ MI300X | 5U | 8 | 5 x 48U | 100 kW | Yes |
| 4 | NVIDIA HGX™ H200/B200 | 8U | 4 | 9 x 42U | 130 kW | No |
| 5 | NVIDIA HGX™ H200/B200 | 8U | 4 | 9 x 48U | 130 kW | No |

Direct Liquid Cooling

| Ver. | GPUs Supported | GPU Server (Form Factor) | GPU Servers per Rack | Racks (Count x Height) | Power Consumption per Rack | CDU (Coolant Distribution Unit) |
|---|---|---|---|---|---|---|
| 1 | NVIDIA HGX™ H100/H200, AMD Instinct™ MI300X | 5U | 8 | 5 x 48U | 100 kW | In-rack |
| 2 | NVIDIA HGX™ H100/H200, AMD Instinct™ MI300X | 5U | 8 | 5 x 48U | 100 kW | External |
| 3 | NVIDIA HGX™ H200, AMD Instinct™ MI300X | 4U | 8 | 5 x 42U | 130 kW | In-rack |
| 4 | NVIDIA HGX™ H200/B200 | 8U | 8 | 5 x 42U | 130 kW | External |
| 5 | NVIDIA HGX™ H200/B200 | 4U | 8 | 5 x 48U | 130 kW | In-rack |
| 6 | NVIDIA HGX™ H200/B200 | 4U | 8 | 5 x 48U | 130 kW | External |

Discover the GIGAPOD

GIGAPOD scales from a single GIGABYTE GPU server up to eight racks with 32 GPU nodes (256 GPUs in total), forming a high-performance supercomputer. Cutting-edge data centers are deploying AI factories, and it all starts with a GIGABYTE GPU server.
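The 32-node, 256-GPU figure can be reproduced from the configuration tables. The Python sketch below does that arithmetic under two assumptions that this page does not state outright: each server carries 8 GPUs (per the "8-GPU Platforms" heading), and in each configuration one rack holds networking switches rather than GPU servers (our inference from 9 racks x 4 servers vs. 32 nodes). It also treats the "Power Consumption per Rack" figure as applying to each GPU rack, which is likewise an assumption. `PodConfig` and its fields are hypothetical names for illustration.

```python
from dataclasses import dataclass

# ASSUMPTION: every GIGAPOD server is an 8-GPU platform
GPUS_PER_SERVER = 8


@dataclass(frozen=True)
class PodConfig:
    """Hypothetical model of one row of the GIGAPOD configuration tables."""
    name: str
    servers_per_rack: int      # "GPU Servers per Rack" column
    total_racks: int           # rack count, e.g. 9 in "9 x 42U"
    kw_per_rack: float         # "Power Consumption per Rack" column
    networking_racks: int = 1  # ASSUMPTION: one rack holds switches, not GPU servers

    @property
    def gpu_racks(self) -> int:
        return self.total_racks - self.networking_racks

    @property
    def gpu_servers(self) -> int:
        return self.gpu_racks * self.servers_per_rack

    @property
    def gpus(self) -> int:
        return self.gpu_servers * GPUS_PER_SERVER

    @property
    def gpu_rack_power_kw(self) -> float:
        # ASSUMPTION: the per-rack power figure applies to each GPU rack only
        return self.gpu_racks * self.kw_per_rack


configs = [
    PodConfig("Air-cooled ver. 1", servers_per_rack=4, total_racks=9, kw_per_rack=50),
    PodConfig("Air-cooled ver. 3", servers_per_rack=8, total_racks=5, kw_per_rack=100),
    PodConfig("DLC ver. 5", servers_per_rack=8, total_racks=5, kw_per_rack=130),
]

for c in configs:
    print(f"{c.name}: {c.gpu_racks} GPU racks, {c.gpu_servers} servers, "
          f"{c.gpus} GPUs, ~{c.gpu_rack_power_kw:g} kW across GPU racks")
```

Under these assumptions, air-cooled versions 1 and 3 and liquid-cooled version 5 all work out to 32 servers and 256 GPUs; the denser 8-servers-per-rack layouts simply need half as many GPU racks. Adjust `networking_racks` if a real deployment's layout differs.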

GIGAPOD is more than a collection of GPU servers: it also includes networking switches, and the complete solution combines hardware, software, and services for easy deployment.

Applications for GPU Clusters


Large Language Models (LLM)

Training models with billions of parameters is a challenge because the GPUs must have enough HBM to hold them. Text-based LLM workloads therefore benefit from a GPU cluster whose single scalable unit offers over 20 TB of GPU memory.
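The "over 20 TB" figure can be checked with simple arithmetic. The Python sketch below multiplies published per-GPU HBM capacities (80 GB for H100 SXM, 141 GB for H200, 192 GB for MI300X) by the 256 GPUs of a full GIGAPOD. The 16-bytes-per-parameter training footprint is a common rule of thumb for mixed-precision training with Adam, not a GIGAPOD specification, and it ignores activations, so treat the parameter estimate as an optimistic upper bound. The helper names are hypothetical.

```python
# HBM capacity per GPU in GB (vendor-published figures)
HBM_GB = {
    "NVIDIA H100 (SXM)": 80,
    "NVIDIA H200": 141,
    "AMD Instinct MI300X": 192,
}

# 32 nodes x 8 GPUs, per the GIGAPOD description
GPUS_PER_POD = 256

# ASSUMPTION: rule of thumb for mixed-precision Adam training:
# 2 B bf16/fp16 weights + 2 B gradients + 4 B fp32 master weights
# + 8 B for two fp32 optimizer moments = 16 B per parameter (activations ignored)
BYTES_PER_PARAM_TRAINING = 16


def pod_hbm_tb(gb_per_gpu: int, gpus: int = GPUS_PER_POD) -> float:
    """Total GPU memory of the pod in decimal terabytes (1 TB = 1000 GB)."""
    return gb_per_gpu * gpus / 1000


def max_trainable_params(total_tb: float,
                         bytes_per_param: int = BYTES_PER_PARAM_TRAINING) -> float:
    """Rough parameter count whose training state fits in the pooled HBM."""
    return total_tb * 1e12 / bytes_per_param


for gpu, gb in HBM_GB.items():
    tb = pod_hbm_tb(gb)
    params = max_trainable_params(tb)
    print(f"{gpu}: {tb:.2f} TB HBM, roughly {params / 1e9:,.0f}B parameters of training state")
```

For an H100-based pod this gives 20.48 TB, in line with the "over 20 TB" claim; H200 and MI300X pods land well above it. In practice, activations, parallelism overheads, and framework buffers reduce the usable capacity.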

Science & Engineering

Research in fields such as physics, chemistry, geology, and biology benefits greatly from GPU-accelerated clusters. Simulations and modeling thrive on the parallel processing capability of GPUs.

Generative AI

Generative AI algorithms can create synthetic data for training other AI models, and they can help automate industrial tasks. This is made possible by a cluster of powerful GPUs connected with fast InfiniBand networking.